Azure Definitions - Part 1

You don’t have to worry about learning Azure concepts. Get started here:

Agile: Agility means reacting fast. Cloud agility is the ability to rapidly change an IT infrastructure to adapt to the evolving needs of the business. For example, if your service peaks one month, you can scale to demand and pay a larger bill for the month. If the following month the demand drops, you can reduce the used resources and be charged less. This agility lets you manage your costs dynamically, optimizing spending as requirements change.

Region:

A region is a geographical area on the planet containing at least one, but potentially multiple data centers that are nearby and networked together with a low-latency network. Azure intelligently assigns and controls the resources within each region to ensure workloads are appropriately balanced.

Some services or virtual machine features are only available in certain regions, such as specific virtual machine sizes or storage types. There are also some global Azure services that do not require you to select a particular region, such as Microsoft Azure Active Directory, Microsoft Azure Traffic Manager, and Azure DNS.

So a region is a group of one or more data centers. When you create a resource in Azure, where in a given region is it created? In which data center? This is where availability zones come in.

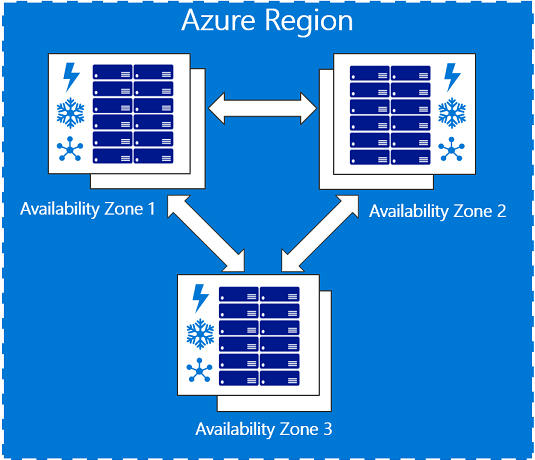

Availability Zone:

Availability Zones are physically separate datacenters within an Azure region. Each Availability Zone is made up of one or more datacenters equipped with independent power, cooling, and networking. It is set up to be an isolation boundary. If one zone goes down, the other continues working. Availability Zones are connected through high-speed, private fiber-optic networks.

Availability Zones are unique physical locations within an Azure region. Each zone is made up of one or more datacenters equipped with independent power, cooling, and networking. To ensure resiliency, there’s a minimum of three separate zones in all enabled regions. The physical separation of Availability Zones within a region protects applications and data from datacenter failures.

With Azure Availability Zones, you can choose which zone within an Azure region hosts a resource. This enables a more granular choice of where and how to host resources within an Azure region. Each zone within an Azure region is essentially a separate datacenter with its own independent power source, networking, and cooling. Each Azure region that offers Availability Zones has a minimum of three separate zones. This ensures that maximum resilience and high availability can be enabled within the region.

Not every region supports Availability Zones, so make sure the region you choose has them. You have to choose the availability zone while you are creating a VM; you can’t change it after the VM is created. To change the zone later, you have to export the VM and import it again in the other zone.

Using Availability Zones in your apps

You can use Availability Zones to run mission-critical applications and build high-availability into your application architecture by co-locating your compute, storage, networking, and data resources within a zone and replicating in other zones. Keep in mind that there could be a cost to duplicating your services and transferring data between zones.

Availability Zones are primarily for VMs, managed disks, load balancers, and SQL databases. Azure services that support Availability Zones fall into two categories:

- Zonal services – you pin the resource to a specific zone (for example, virtual machines, managed disks, IP addresses)

- Zone-redundant services – platform replicates automatically across zones (for example, zone-redundant storage, SQL Database).

Do Availability Zones and Regions look the same?

Each region, like the name indicates, is a separate geographic area. Within each region there are multiple, isolated locations: Availability Zones. Each AZ has at least one data center. AZs have independent power sources, networking, and cooling resources.

Region Pair:

Availability zones are created using one or more datacenters, and there are a minimum of three zones within a single region. However, it’s possible that a large enough disaster could cause an outage big enough to affect even two datacenters. That’s why Azure also creates region pairs.

Each Azure region is paired with another region within the same geography (such as US, Europe, or Asia), ideally at least 300 miles away. This approach allows for the replication of resources (such as virtual machine storage) across a geography, which helps reduce the likelihood of interruptions due to events such as natural disasters, civil unrest, power outages, or physical network outages affecting both regions at once. A region is paired with a region in the same geography, not in another geography. Some services provide automatic replication to the paired region.

Each Azure region is paired with another region within the same geography, together making a regional pair, which allows replication of resources, and reducing time to recover from service interruptions like a natural disaster. Benefits of Region Pairs:

- Physical isolation – When possible, Azure prefers at least 300 miles of separation between datacenters in a regional pair, although this isn’t practical or possible in all geographies. Physical datacenter separation reduces the likelihood of natural disasters, civil unrest, power outages, or physical network outages affecting both regions at once. Isolation is subject to the constraints within the geography (geography size, power/network infrastructure availability, regulations, etc.).

- Platform-provided replication - Some services such as Geo-Redundant Storage provide automatic replication to the paired region.

- Region recovery order – In the event of a broad outage, recovery of one region is prioritized out of every pair. Applications that are deployed across paired regions are guaranteed to have one of the regions recovered with priority. If an application is deployed across regions that are not paired, recovery might be delayed – in the worst case, the chosen regions may be the last two to be recovered.

- Sequential updates – Planned Azure system updates are rolled out to paired regions sequentially (not at the same time) to minimize downtime, the effect of bugs, and logical failures in the rare event of a bad update.

- Data residency – A region resides within the same geography as its pair (except Brazil South) to meet data residency requirements for tax and law enforcement jurisdiction purposes.

Azure doesn’t enable replication of your resources by default. You have to enable replication on the resources in your region so that they are replicated to the other region in the same region pair (not to a region in another region pair or geography).

There is one exception to this pairing: Brazil South must be replicated to South Central US because there is only one region in Brazil or South America. There are also some special Azure regions, such as Azure Government, Azure Germany, and Azure China, that are used for specific purposes only.

Geography:

Azure divides the world into geographies that are defined by geopolitical boundaries or country borders. An Azure geography is a discrete market typically containing two or more regions that preserve data residency and compliance boundaries. This division has several benefits.

- Geographies allow customers with specific data residency and compliance needs to keep their data and applications close.

- Geographies ensure that data residency, sovereignty, compliance, and resiliency requirements are honored within geographical boundaries.

- Geographies are fault-tolerant to withstand complete region failure through their connection to dedicated high-capacity networking infrastructure.

Data residency refers to the physical or geographic location of an organization’s data or information. It defines the legal or regulatory requirements imposed on data based on the country or region in which it resides and is an important consideration when planning out your application data storage.

Geographies are broken up into the following areas:

- Americas

- Europe

- Asia Pacific

- Middle East and Africa

Each region belongs to a single geography and has specific service availability, compliance, and data residency/sovereignty rules applied to it.

Availability Sets:

An availability set is a logical grouping of two or more VMs that helps keep your application available during planned or unplanned maintenance.

A planned maintenance event is when the underlying Azure fabric that hosts VMs is updated by Microsoft. A planned maintenance event is done to patch security vulnerabilities, improve performance, and add or update features. Most of the time these updates are done without any impact to the guest VMs. But sometimes VMs require a reboot to complete an update. When the VM is part of an availability set, the Azure fabric updates are sequenced so not all of the associated VMs are rebooted at the same time. VMs are put into different update domains. Update domains indicate groups of VMs and underlying physical hardware that can be rebooted at the same time. Update domains are a logical part of each data center and are implemented with software and logic.

Unplanned maintenance events involve a hardware failure in the data center, such as a power outage or disk failure. VMs that are part of an availability set automatically switch to a working physical server so the VM continues to run. The group of virtual machines that share common hardware are in the same fault domain. A fault domain is essentially a rack of servers. It provides the physical separation of your workload across different power, cooling, and network hardware that support the physical servers in the data center server racks. In the event the hardware that supports a server rack becomes unavailable, only that rack of servers is affected by the outage.

Note: If you don’t choose an availability set, Azure doesn’t assign one automatically. An availability set costs nothing, so it is recommended to use one. An availability set applies to a specific region only: if you created an availability set in one region, you must create the VM in that same region to use it. If you choose another region, that availability set isn’t listed.

A physical rack in the datacenter is called a fault domain. A group of servers that are updated or patched at the same time is called an update domain.

With an availability set, you get:

- Up to three fault domains that each have a server rack with dedicated power and network resources

- Five logical update domains

Your VMs are then sequentially placed across the fault and update domains. For example, six VMs in an availability set can be distributed across two fault domains and five update domains.
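To make the sequential placement a bit more concrete, here is a minimal Python sketch. It assumes a simple round-robin rule (VM number modulo the domain count), which is only an illustration and not necessarily how the Azure platform decides placement. The function name `place_vms` is made up for this example.

```python
from typing import List, Tuple


def place_vms(vm_count: int, fault_domains: int = 2,
              update_domains: int = 5) -> List[Tuple[int, int, int]]:
    """Return (vm_index, fault_domain, update_domain) for each VM.

    Assumption: plain round-robin placement, used here only to illustrate
    how VMs end up spread across both kinds of domains.
    """
    if fault_domains < 1 or update_domains < 1:
        raise ValueError("domain counts must be positive")
    return [(vm, vm % fault_domains, vm % update_domains)
            for vm in range(vm_count)]


# Six VMs across two fault domains and five update domains.
for vm, fd, ud in place_vms(6):
    print(f"VM{vm}: fault domain {fd}, update domain {ud}")
```

With this rule, no single fault domain or update domain ever holds all six VMs, which is the point of the availability set.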

There’s no cost for an availability set. You only pay for the VMs within the availability set. We highly recommend that you place each workload in an availability set to avoid a single point of failure in your VM architecture.

Virtual Machine Scale Sets:

Azure Virtual Machine Scale Sets let you create and manage a group of identical, load balanced VMs. Imagine you’re running a website that enables scientists to upload astronomy images that need to be processed. If you duplicated the VM, you’d normally need to configure an additional service to route requests between multiple instances of the website. VM Scale Sets could do that work for you.

Scale sets allow you to centrally manage, configure, and update a large number of VMs in minutes to provide highly available applications. The number of VM instances can automatically increase or decrease in response to demand or a defined schedule. With VM Scale Sets, you can build large-scale services for areas such as compute, big data, and container workloads.
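The idea of instances increasing or decreasing in response to demand can be sketched as a small decision function. This is a simplified model and not the scale set API: the thresholds, the minimum and maximum counts, and the name `desired_instance_count` are all assumptions chosen for illustration.

```python
def desired_instance_count(current: int, avg_cpu_percent: float,
                           scale_out_above: float = 70.0,
                           scale_in_below: float = 30.0,
                           minimum: int = 2, maximum: int = 10) -> int:
    """Decide how many VM instances to run based on average CPU load.

    Assumption: scale by one instance per evaluation and clamp the result
    between a minimum and a maximum instance count.
    """
    if avg_cpu_percent > scale_out_above:
        target = current + 1      # demand is high: add an instance
    elif avg_cpu_percent < scale_in_below:
        target = current - 1      # demand is low: remove an instance
    else:
        target = current          # load is in the comfortable range
    return max(minimum, min(maximum, target))


print(desired_instance_count(current=3, avg_cpu_percent=85.0))
print(desired_instance_count(current=3, avg_cpu_percent=10.0))
```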

Azure Batch:

Use Azure Batch to run large-scale parallel and high-performance computing (HPC) batch jobs efficiently in Azure. Azure Batch creates and manages a pool of compute nodes (virtual machines), installs the applications you want to run, and schedules jobs to run on the nodes. There is no cluster or job scheduler software to install, manage, or scale. Instead, you use Batch APIs and tools, command-line scripts, or the Azure portal to configure, manage, and monitor your jobs.

Developers can use Batch as a platform service to build SaaS applications or client apps where large-scale execution is required. For example, build a service with Batch to run a Monte Carlo risk simulation for a financial services company, or a service to process many images.

There is no additional charge for using Batch. You only pay for the underlying resources consumed, such as the virtual machines, storage, and networking.

Resource Group:

Resource groups enable you to manage all your resources in an application together. Resource groups are containers that allow you to manage the resources required for your application as a single management unit. The resource group allows us to administer all the services, disks, network interfaces, and other elements that potentially make up our solution as a unit.

Resource groups are collections of Azure resources. Every Azure resource must exist in one (and only one) resource group. You can secure resources at the resource group level with role-based access control (RBAC). A resource group may contain resources from multiple regions. A resource in one resource group can access resources in other resource groups. If you delete a resource group, all the resources in it are deleted.

A resource group is a logical container for resources deployed on Azure. These resources are anything you create in an Azure subscription like virtual machines, Application Gateways, and CosmosDB instances. All resources must be in a resource group and a resource can only be a member of a single resource group. Many resources can be moved between resource groups with some services having specific limitations or requirements to move. Resource groups can’t be nested. Before any resource can be provisioned, you need a resource group for it to be placed in.

A resource can exist in only one resource group. Azure doesn’t back up resource groups: if a resource group is deleted, all resources within it are deleted. You have to back up the resources in a resource group manually.

Logical grouping

Resource groups exist to help manage and organize your Azure resources. By placing resources of similar usage, type, or location, you can provide some order and organization to resources you create in Azure. Logical grouping is the aspect that you’re most interested in here, since there’s a lot of disorder among our resources.

Life cycle

If you delete a resource group, all resources contained within are also deleted. Organizing resources by life cycle can be useful in non-production environments, where you might try an experiment, but then dispose of it when done. Resource groups make it easy to remove a set of resources at once.

Authorization

Resource groups are also a scope for applying role-based access control (RBAC) permissions. By applying RBAC permissions to a resource group, you can ease administration and limit access to allow only what is needed.

Organizing principles

Resource groups can be organized in a number of ways. Let’s take a look at a few examples. You might put all resources that are core infrastructure into one resource group. But you could also organize them strictly by resource type. For example, put all virtual networks in one resource group, all virtual machines in another resource group, and all Azure Cosmos DB instances in yet another resource group.

You could organize them by environment (prod, qa, dev). In this case, all production resources are in one resource group, all test resources are in another resource group, and so on. You could organize them by department (marketing, finance, human resources). Marketing resources go in one resource group, finance in another resource group, and HR in a third resource group. You could even use a combination of these strategies and organize by environment and department. Put production finance resources in one resource group, dev finance resources in another, and the same for the marketing resources.

When you delete a resource group, you delete all the resources within it. Always create a resource group when you create your first VM/application.

- Resource - A manageable item that is available through Azure. Virtual machines, storage accounts, web apps, databases, and virtual networks are examples of resources.

- Resource group - A container that holds related resources for an Azure solution. The resource group includes those resources that you want to manage as a group. You decide how to allocate resources to resource groups based on what makes the most sense for your organization.

- Resource provider - A service that supplies Azure resources. For example, a common resource provider is Microsoft.Compute, which supplies the virtual machine resource. Microsoft.Storage is another common resource provider.

- Resource Manager template - A JavaScript Object Notation (JSON) file that defines one or more resources to deploy to a resource group or subscription. The template can be used to deploy the resources consistently and repeatedly.

- Declarative syntax - Syntax that lets you state “Here is what I intend to create” without having to write the sequence of programming commands to create it. The Resource Manager template is an example of the declarative syntax. In the file, you define the properties for the infrastructure to deploy to Azure.
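To give a feel for the declarative style, here is a small Python sketch that builds a template as a dictionary and prints it as JSON. The overall shape (`$schema`, `contentVersion`, `resources`) follows the usual Resource Manager template layout, but the storage account name, location, SKU, and API version below are illustrative assumptions, so check them against the current documentation before deploying anything.

```python
import json

# A declarative description: it states WHAT should exist, not the steps to create it.
template = {
    "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
    "contentVersion": "1.0.0.0",
    "resources": [
        {
            # Resource provider (Microsoft.Storage) plus resource type.
            "type": "Microsoft.Storage/storageAccounts",
            "apiVersion": "2022-09-01",       # assumed version, verify before use
            "name": "examplestorage001",      # hypothetical name
            "location": "eastus",             # hypothetical region
            "sku": {"name": "Standard_LRS"},
            "kind": "StorageV2",
        }
    ],
}

template_json = json.dumps(template, indent=2)
print(template_json)
```

Deploying the same file again describes the same end state, which is why templates suit consistent, repeatable deployments.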

There are some important factors to consider when defining your resource group:

- All the resources in your group should share the same lifecycle. You deploy, update, and delete them together. If one resource, such as a database server, needs to exist on a different deployment cycle it should be in another resource group.

- Each resource can only exist in one resource group.

- You can add or remove a resource to a resource group at any time.

- You can move a resource from one resource group to another group.

- A resource group can contain resources that are located in different regions.

- A resource group can be used to scope access control for administrative actions.

- A resource can interact with resources in other resource groups. This interaction is common when the two resources are related but don’t share the same lifecycle (for example, web apps connecting to a database).
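The rules in this list can be modeled in a few lines of Python. This is a toy in-memory model, not the Azure SDK: the class `ResourceGroups` and its methods are invented here only to show the one-group-per-resource rule, moving a resource, and the cascade on delete.

```python
class ResourceGroups:
    """Toy model of resource groups (illustration only, not an Azure API)."""

    def __init__(self):
        self._groups = {}   # group name -> set of resource names
        self._owner = {}    # resource name -> group name

    def create_group(self, group):
        self._groups.setdefault(group, set())

    def add(self, group, resource):
        # Each resource can only exist in one resource group.
        if resource in self._owner:
            raise ValueError(f"{resource} already belongs to {self._owner[resource]}")
        self._groups[group].add(resource)
        self._owner[resource] = group

    def move(self, resource, target_group):
        # Moving removes the resource from its old group and adds it to the new one.
        self._groups[self._owner[resource]].discard(resource)
        self._groups[target_group].add(resource)
        self._owner[resource] = target_group

    def delete_group(self, group):
        # Deleting a group deletes every resource inside it.
        deleted = sorted(self._groups.pop(group))
        for resource in deleted:
            del self._owner[resource]
        return deleted


rgs = ResourceGroups()
rgs.create_group("web-rg")
rgs.create_group("data-rg")
rgs.add("web-rg", "webapp1")
rgs.add("web-rg", "sqldb1")
rgs.move("sqldb1", "data-rg")        # the database has a different lifecycle
print(rgs.delete_group("web-rg"))    # only the web app is deleted
```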

What are Tags?

Tags are name/value pairs of text data that you can apply to resources and resource groups. Tags allow you to associate custom details with your resource, in addition to the standard Azure properties a resource has. Common examples include:

- department (like finance, marketing, and more)

- environment (prod, test, dev)

- cost center

- life cycle and automation (like shutdown and startup of virtual machines)

A resource can have up to 50 tags. The name is limited to 512 characters for all types of resources except storage accounts, which have a limit of 128 characters. The tag value is limited to 256 characters for all types of resources. Tags aren’t inherited from parent resources. Not all resource types support tags, and tags can’t be applied to classic resources.
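The limits above are easy to check before you apply tags. The following Python sketch validates a set of tags against the numbers quoted in this section (50 tags, 512-character names or 128 for storage accounts, 256-character values). The function name `validate_tags` is hypothetical, and the limits should be re-checked against the current documentation.

```python
MAX_TAGS = 50
MAX_VALUE_LEN = 256


def validate_tags(tags: dict, is_storage_account: bool = False) -> list:
    """Return a list of problems found; an empty list means the tags look fine."""
    max_name_len = 128 if is_storage_account else 512
    problems = []
    if len(tags) > MAX_TAGS:
        problems.append(f"too many tags: {len(tags)} > {MAX_TAGS}")
    for name, value in tags.items():
        if len(name) > max_name_len:
            problems.append(f"tag name too long: {name[:20]}...")
        if len(value) > MAX_VALUE_LEN:
            problems.append(f"value too long for tag: {name[:20]}")
    return problems


tags = {"department": "finance", "environment": "prod", "cost center": "1234"}
print(validate_tags(tags))
```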

Tags can be added and manipulated through the Azure portal, Azure CLI, Azure PowerShell, Resource Manager templates, and through the REST API. You can use Azure Policy to automatically add or enforce tags for resources your organization creates based on policy conditions that you define. For example, you could require that a value for the Department tag is entered when someone in your organization creates a virtual network in a specific resource group.

Note: Not all resources support tags, so you will want to confirm that your resource type supports them. Tags aren’t inherited from parent resources.

Azure Resource Manager:

Azure Resource Manager is the deployment and management service for Azure. It provides a consistent management layer that enables you to create, update, and delete resources in your Azure subscription. You can use its access control, auditing, and tagging features to secure and organize your resources after deployment.

When you take actions through the portal, PowerShell, Azure CLI, REST APIs, or client SDKs, the Azure Resource Manager API handles your request. Because all requests are handled through the same API, you see consistent results and capabilities in all the different tools. All capabilities that are available in the portal are also available through PowerShell, Azure CLI, REST APIs, and client SDKs.

https://docs.microsoft.com/en-us/azure/azure-resource-manager/resource-group-overview

Azure Virtual Machine Scale Sets:

Azure virtual machine scale sets let you create and manage a group of identical, load-balanced VMs. The number of VM instances can automatically increase or decrease in response to demand or a defined schedule. Scale sets provide high availability to your applications and allow you to centrally manage, configure, and update a large number of VMs. With virtual machine scale sets, you can build large-scale services for areas such as compute, big data, and container workloads.

https://docs.microsoft.com/en-us/azure/virtual-machine-scale-sets/overview

What is Azure compute?

Azure compute is an on-demand computing service for running cloud-based applications. It provides computing resources like multi-core processors and supercomputers via virtual machines and containers. It also provides serverless computing to run apps without requiring infrastructure setup or configuration. The resources are available on-demand and can typically be created in minutes or even seconds. You pay only for the resources you use and only for as long as you’re using them.

There are four common techniques for performing compute in Azure:

- Virtual machines

- Containers

- Azure App Service

- Serverless computing

What are virtual machines?

Virtual machines, or VMs, are software emulations of physical computers. They include a virtual processor, memory, storage, and networking resources. They host an operating system (OS), and you’re able to install and run software just like a physical computer. And by using a remote desktop client, you can use and control the virtual machine as if you were sitting in front of it.

What are containers?

Containers are a virtualization environment for running applications. Just like virtual machines, containers are run on top of a host operating system but unlike VMs, they don’t include an operating system for the apps running inside the container. Instead, containers bundle the libraries and components needed to run the application and use the existing host OS running the container. For example, if five containers are running on a server with a specific Linux kernel, all five containers and the apps within them share that same Linux kernel.

Containers provide a consistent, isolated execution environment for applications. They’re similar to VMs except they don’t require a guest operating system. Instead, the application and all its dependencies are packaged into a “container” and then a standard runtime environment is used to execute the app. This allows the container to start up in just a few seconds, because there’s no OS to boot and initialize. You only need the app to launch.

Multiple containers can be run on a single machine, and containers can be moved between machines. The portability of the container makes it easy for applications to be deployed in multiple environments, either on-premises or in the cloud, often with no changes to the application.

If you wish to run multiple instances of an application on a single virtual machine, containers are an excellent choice. The container orchestrator can start, stop, and scale out application instances as needed.

Virtual Machines virtualize the base hardware whereas containers virtualize the Operating System.

What is Azure App Service?

Azure App Service is a platform-as-a-service (PaaS) offering in Azure that enables you to build and host web apps, background jobs, mobile backends, and RESTful APIs in the programming language of your choice without managing infrastructure. It offers automatic scaling and high availability. App Service supports both Windows and Linux, and enables automated deployments from GitHub, Azure DevOps, or any Git repo to support a continuous deployment model.

Azure App Service is an HTTP-based service that enables you to build and host many types of web-based solutions without managing infrastructure. For example, you can host web apps, mobile back ends, and RESTful APIs in several supported programming languages. Applications developed in .NET, .NET Core, Java, Ruby, Node.js, PHP, or Python can run in and scale with ease on both Windows and Linux-based environments.

An App Service plan is the container for your app. The App Service plan settings will determine the location, features, cost and compute resources associated with your app.

You pay for the Azure compute resources your app uses while it processes requests based on the App Service Plan you choose. The App Service plan determines how much hardware is devoted to your host - for example, whether it’s dedicated or shared hardware, and how much memory is reserved for it. There is even a free tier you can use to host small, low-traffic sites.

What is Serverless Computing?

Serverless computing is a cloud-hosted execution environment that runs your code but completely abstracts the underlying hosting environment. You create an instance of the service, and you add your code; no infrastructure configuration or maintenance is required, or even allowed.

Serverless computing lets you run application code without creating, configuring, or maintaining a server. The core idea is that your application is broken into separate functions that run when triggered by some action. This is ideal for automated tasks - for example, you can build a serverless process that automatically sends an email confirmation after a customer makes an online purchase.

The serverless model differs from VMs and containers in that you only pay for the processing time used by each function as it executes. VMs and containers are charged while they’re running - even if the applications on them are idle. This architecture doesn’t work for every app - but when the app logic can be separated to independent units, you can test them separately, update them separately, and launch them in microseconds, making this approach the fastest option for deployment.

Serverless computing abstracts the servers you run on. You never explicitly reserve server instances; the platform manages that for you. Each function execution can run on a different compute instance, and this execution context is transparent to the code. With serverless architecture, you simply deploy your code, which then runs with high availability.

Serverless computing is also event driven. Specific functions are executed in response to events, and you are charged only for the time those functions run.
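The event-driven idea can be illustrated without any cloud service at all. The sketch below is plain Python, not Azure Functions code: the `on` decorator, the `dispatch` function, and the event name `purchase_completed` are all made up to show a function that only runs when its trigger fires, using the purchase confirmation email as the example.

```python
from collections import defaultdict

# Maps an event type to the functions that should run when it occurs.
handlers = defaultdict(list)


def on(event_type):
    """Register a function to run whenever the given event type occurs."""
    def register(func):
        handlers[event_type].append(func)
        return func
    return register


@on("purchase_completed")
def send_confirmation_email(event):
    # A real function would call an email service; here we just build the message.
    return f"Confirmation sent to {event['email']} for order {event['order_id']}"


def dispatch(event_type, event):
    """Run every function registered for this event; nothing runs otherwise."""
    return [handler(event) for handler in handlers.get(event_type, [])]


print(dispatch("purchase_completed", {"email": "customer@example.com", "order_id": 42}))
```

No code executes between events, which mirrors why the serverless model bills only for function run time.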

Azure Container Instance (ACI):

Azure Container Instances (ACI) is Azure’s product for running containers. It is a PaaS offering and the fastest way to run a container in Azure: you upload your containers, and the service runs them for you.

Azure Kubernetes Service (AKS):

The task of automating and managing a large number of containers and how they interact is known as orchestration. Kubernetes is open-source orchestration software for deploying, managing, and scaling containers.

Azure Kubernetes Service (AKS) manages your hosted Kubernetes environment, making it quick and easy to deploy and manage containerized applications without container orchestration expertise. It also eliminates the burden of ongoing operations and maintenance by provisioning, upgrading, and scaling resources on-demand, without taking your applications offline.

Microservices:

The central idea behind microservices is that some types of applications become easier to build and maintain when they are broken down into smaller, composable pieces which work together. Each component is continuously developed and separately maintained, and the application is then simply the sum of its constituent components. This is in contrast to a traditional, “monolithic” application, which is developed all in one piece.

Applications built as a set of modular components are easier to understand, easier to test, and most importantly easier to maintain over the life of the application. It enables organizations to achieve much higher agility and be able to vastly improve the time it takes to get working improvements to production. This approach has proven to be superior, especially for large enterprise applications which are developed by teams of geographically and culturally diverse developers.

Containers are often used to create solutions using a microservice architecture. This architecture is where you break solutions (applications) into smaller, independent pieces. For example, you may split a website into a container hosting your front end, another hosting your back end, and a third for storage. This split allows you to separate portions of your app into logical sections that can be maintained, scaled, or updated independently.

Azure Networking:

Azure networking allows you to connect your on-premises environment to the cloud securely over the internet.

https://docs.microsoft.com/en-us/azure/networking/networking-overview

Latency refers to the time it takes for data to travel over the network to its destination, and is typically measured in milliseconds. Bandwidth refers to the amount of data that can fit on the connection.
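A quick calculation shows how the two interact. This Python sketch uses a deliberately simplified model (latency counted once, plus payload size divided by bandwidth) and ignores protocol overhead, retransmissions, and round trips. The function name `transfer_time_seconds` and the sample numbers are assumptions for illustration.

```python
def transfer_time_seconds(size_megabytes: float, bandwidth_mbps: float,
                          latency_ms: float) -> float:
    """Estimate transfer time as latency plus payload size over bandwidth.

    Assumption: 1 megabyte = 8 megabits, and latency is paid once.
    """
    size_megabits = size_megabytes * 8
    return latency_ms / 1000 + size_megabits / bandwidth_mbps


# A 100 MB file over a 100 Mbps link with 50 ms latency.
print(transfer_time_seconds(100, 100, 50))

# A tiny 1 KB request on the same link: latency dominates.
print(transfer_time_seconds(0.001, 100, 50))
```

For large transfers bandwidth is the limiting factor, while for small requests latency makes up almost all of the time.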

Azure Virtual Network:

A virtual network is a logically isolated network on Azure. A virtual network allows Azure resources to securely communicate with each other, the internet, and on-premises networks. A virtual network is scoped to a single region; however, multiple virtual networks from different regions can be connected together using virtual network peering. Virtual networks can be segmented into one or more subnets. Subnets help you organize and secure your resources in discrete sections.

Azure manages the physical hardware for you. You configure virtual networks and gateways through software, which enables you to treat a virtual network just like your own network. You choose which networks your virtual network can reach, whether that’s the public internet or other networks in the private IP address space.

Peering connects virtual networks in Azure. Peering doesn’t encrypt traffic between them. To view how traffic is routed, open Network Watcher in the Azure portal and check the Topology tab.
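Subnet segmentation and peering are mostly a matter of address planning, which Python's standard `ipaddress` module can illustrate. The address ranges below are hypothetical, and the `can_peer` helper is an invented name that checks only one thing: that the two address spaces don't overlap, which peered virtual networks need.

```python
import ipaddress

# A hypothetical virtual network address space, split into /24 subnets.
vnet_a = ipaddress.ip_network("10.0.0.0/16")
subnets = list(vnet_a.subnets(new_prefix=24))
web_subnet, app_subnet = subnets[0], subnets[1]
print(f"{len(subnets)} possible /24 subnets, e.g. {web_subnet} and {app_subnet}")


def can_peer(a, b) -> bool:
    """Peered virtual networks need address spaces that don't overlap."""
    return not a.overlaps(b)


vnet_b = ipaddress.ip_network("10.1.0.0/16")     # separate range: fine to peer
vnet_c = ipaddress.ip_network("10.0.128.0/17")   # sits inside vnet_a: conflict
print(can_peer(vnet_a, vnet_b), can_peer(vnet_a, vnet_c))
```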

Azure Load Balancer:

Availability refers to how long your service is up and running without interruption. High availability, or highly available, refers to a service that’s up and running for a long period of time. A load balancer distributes traffic evenly among each system in a pool. A load balancer can help you achieve both high availability and resiliency.

The load balancer receives the user’s request and directs the request to one of the VMs in your VM pool. If a VM is unavailable or stops responding, the load balancer stops sending traffic to it. The load balancer then directs traffic to one of the responsive servers. Load balancing enables you to run maintenance tasks without interrupting service. For example, you can stagger the maintenance window for each VM. During the maintenance window, the load balancer detects that the VM is unresponsive, and directs traffic to other VMs in the pool.

With Azure Load Balancer, you can scale your applications and create high availability for your services. Load Balancer supports inbound and outbound scenarios, provides low latency and high throughput, and scales up to millions of flows for all Transmission Control Protocol (TCP) and User Datagram Protocol (UDP) applications. You can use Load Balancer with incoming internet traffic, internal traffic across Azure services, port forwarding for specific traffic, or outbound connectivity for VMs in your virtual network.

Load Balancer distributes new inbound flows that arrive on the Load Balancer’s frontend to backend pool instances, according to rules and health probes.
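The idea of distributing flows to a backend pool while respecting health probes can be sketched as follows. This is a simplified illustration, not Azure's implementation: the hash over five flow fields, the `pick_backend` name, and the VM names are all assumptions made for the example.

```python
import hashlib


def pick_backend(flow, backends, healthy):
    """Map a flow to one healthy backend; the same flow maps to the same backend."""
    candidates = [b for b in backends if healthy.get(b, False)]
    if not candidates:
        raise RuntimeError("no healthy backend available")
    key = "|".join(str(part) for part in flow).encode("utf-8")
    digest = hashlib.sha256(key).digest()
    return candidates[int.from_bytes(digest[:8], "big") % len(candidates)]


backends = ["vm-1", "vm-2", "vm-3"]                 # hypothetical backend pool
healthy = {"vm-1": True, "vm-2": True, "vm-3": True}  # result of health probes
flow = ("203.0.113.7", 50123, "10.0.0.4", 443, "TCP")

first = pick_backend(flow, backends, healthy)

# Simulate a failed health probe: the flow is moved to another VM.
healthy[first] = False
second = pick_backend(flow, backends, healthy)
print(first, "->", second)
```

Once a VM fails its probe it drops out of the candidate list, so no new flow is sent to it until it is marked healthy again.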

Note: Azure Load Balancer distributes traffic within the same region to make your services more highly available and resilient.

https://docs.microsoft.com/en-us/azure/load-balancer/load-balancer-overview

Azure VPN Gateway:

Azure VPN gateways provide cross-premises connectivity between customer premises and Azure. A VPN gateway is a specific type of virtual network gateway that is used to send encrypted traffic between an Azure virtual network and an on-premises location over the public Internet. You can also use a VPN gateway to send encrypted traffic between Azure virtual networks over the Microsoft network.

https://docs.microsoft.com/en-us/azure/vpn-gateway/vpn-gateway-about-vpngateways

Azure Application Gateway:

If all your traffic is HTTP, a potentially better option is to use Azure Application Gateway. Application Gateway is a load balancer designed for web applications. It uses Azure Load Balancer at the transport level (TCP) and applies sophisticated URL-based routing rules to support several advanced scenarios.

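Azure Application Gateway is a web traffic load balancer that enables you to manage traffic to your web applications. Traditional load balancers operate at the transport layer (OSI layer 4 - TCP and UDP) and route traffic based on source IP address and port, to a destination IP address and port. Application Gateway, by contrast, operates at the application layer (OSI layer 7) and can make routing decisions based on attributes of an HTTP request, such as the URL path or host headers.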

https://docs.microsoft.com/en-us/azure/application-gateway/overview

Here are some of the benefits of using Azure Application Gateway over a simple load balancer:

- Cookie affinity. Useful when you want to keep a user session on the same backend server.

- SSL termination. Application Gateway can manage your SSL certificates and pass unencrypted traffic to the backend servers to avoid encryption/decryption overhead. It also supports full end-to-end encryption for applications that require that.

- Web application firewall. Application Gateway supports a sophisticated web application firewall (WAF) with detailed monitoring and logging to detect malicious attacks against your network infrastructure.

- URL rule-based routes. Application Gateway allows you to route traffic based on URL patterns, source IP address and port to destination IP address and port. This is helpful when setting up a content delivery network.

- Rewrite HTTP headers. You can add or remove information from the inbound and outbound HTTP headers of each request to enable important security scenarios, or scrub sensitive information such as server names.
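URL rule-based routing from the list above can be pictured with a small sketch. The path prefixes and pool names are hypothetical, and real Application Gateway rules are configured in Azure rather than written as code; this only shows the matching logic.

```python
# Hypothetical path rules: (URL prefix, backend pool name), checked in order.
ROUTES = [
    ("/images/", "image-pool"),
    ("/video/", "video-pool"),
]
DEFAULT_POOL = "default-pool"


def route(path: str) -> str:
    """Return the backend pool for a request path, falling back to the default pool."""
    for prefix, pool in ROUTES:
        if path.startswith(prefix):
            return pool
    return DEFAULT_POOL


for path in ("/images/logo.png", "/video/intro.mp4", "/index.html"):
    print(path, "->", route(path))
```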

Azure Load Balancer and Azure Application Gateway might look similar. Check the image below from Stack Overflow to see the differences.

Azure Traffic Manager:

Azure Load Balancer distributes traffic within the same region to make your services more highly available and resilient. Traffic Manager works at the DNS level, and directs the client to a preferred endpoint. This endpoint can be to the region that’s closest to your user.

Azure Traffic Manager is a DNS-based traffic load balancer that enables you to distribute traffic optimally to services across global Azure regions, while providing high availability and responsiveness.

Traffic Manager uses DNS to direct client requests to the most appropriate service endpoint based on a traffic-routing method and the health of the endpoints. An endpoint is any Internet-facing service hosted inside or outside of Azure. Traffic Manager provides a range of traffic-routing methods and endpoint monitoring options to suit different application needs and automatic failover models. Traffic Manager is resilient to failure, including the failure of an entire Azure region.

Note:

Azure provides a suite of fully managed load-balancing solutions for your scenarios. If you are looking for Transport Layer Security (TLS) protocol termination (“SSL offload”) or per-HTTP/HTTPS request, application-layer processing, review Application Gateway. If you are looking for regional load balancing, review Load Balancer. Your end-to-end scenarios might benefit from combining these solutions as needed.

Compare Load Balancer to Traffic Manager

Load Balancer and Traffic Manager both help make your services more resilient, but in slightly different ways. When Load Balancer detects an unresponsive VM, it directs traffic to other VMs in the pool. Traffic Manager, in contrast, monitors the health of your endpoints. When it finds an unresponsive endpoint, it directs traffic to the next closest endpoint that is responsive. Traffic Manager chooses the endpoint that’s closest to the user’s DNS server.
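A minimal sketch of that failover behavior: pick the closest endpoint that is still healthy. The `Endpoint` class, the region names, and the latency figures are invented for the example, and "closest" is approximated here by measured latency.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Endpoint:
    name: str
    latency_ms: float  # assumed latency from the user's DNS server
    healthy: bool


def choose_endpoint(endpoints) -> Optional[Endpoint]:
    """Return the lowest-latency healthy endpoint, or None if all are down."""
    candidates = [e for e in endpoints if e.healthy]
    if not candidates:
        return None
    return min(candidates, key=lambda e: e.latency_ms)


endpoints = [
    Endpoint("west-europe", 20, healthy=True),
    Endpoint("east-us", 95, healthy=True),
    Endpoint("southeast-asia", 180, healthy=True),
]

print(choose_endpoint(endpoints).name)

# The closest endpoint stops responding, so the next closest one is chosen.
endpoints[0].healthy = False
print(choose_endpoint(endpoints).name)
```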

Content Delivery Network:

A content delivery network (CDN) is a distributed network of servers that can efficiently deliver web content to users. It is a way to get content to users in their local region to minimize latency. CDN can be hosted in Azure or any other location. You can cache content at strategically placed physical nodes across the world and provide better performance to end users. Typical usage scenarios include web applications containing multimedia content, a product launch event in a particular region, or any event where you expect a high-bandwidth requirement in a region.

CDNs store cached content on edge servers in point-of-presence (POP) locations that are close to end users, to minimize latency.

https://docs.microsoft.com/en-us/azure/cdn/cdn-overview
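The caching behavior of an edge server can be sketched as a small cache with a time-to-live (TTL). This is an illustration only: the `EdgeCache` class, the 60-second TTL, and the fake clock are assumptions for the example, not part of any Azure API.

```python
import time


class EdgeCache:
    """Toy edge server: serve from cache while fresh, otherwise fetch from the origin."""

    def __init__(self, fetch_from_origin, ttl_seconds=60.0, clock=time.monotonic):
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._clock = clock
        self._store = {}  # path -> (content, expiry time)
        self.hits = 0
        self.misses = 0

    def get(self, path):
        now = self._clock()
        entry = self._store.get(path)
        if entry is not None and entry[1] > now:
            self.hits += 1
            return entry[0]
        self.misses += 1
        content = self._fetch(path)
        self._store[path] = (content, now + self._ttl)
        return content


# Demo with a fake clock so the expiry can be shown without waiting.
fake_now = [0.0]
cache = EdgeCache(lambda path: f"content of {path}", ttl_seconds=60,
                  clock=lambda: fake_now[0])

cache.get("/logo.png")   # miss: fetched from the origin
cache.get("/logo.png")   # hit: served from the edge
fake_now[0] = 61.0
cache.get("/logo.png")   # miss again: the cached copy expired
print(cache.hits, cache.misses)
```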

Connectivity Options to Azure:

Point-to-Site Connection:

You use a Point-to-Site (P2S) VPN gateway to create a secure connection to your virtual network from an individual client computer. Point-to-Site VPN connections are useful when you want to connect to your VNet from a remote location. When you have only a few clients that need to connect to a VNet, a P2S VPN is a useful solution to use instead of a Site-to-Site VPN. A P2S VPN connection is established by starting it from the client computer.

Site-to-Site Connection:

A Site-to-Site VPN gateway connection is used to connect your on-premises network to an Azure virtual network over an IPsec/IKE (IKEv1 or IKEv2) VPN tunnel. This type of connection requires a VPN device located on-premises that has an externally facing public IP address assigned to it.

ExpressRoute:

ExpressRoute lets you extend your on-premises networks into the Microsoft cloud over a private connection facilitated by a connectivity provider. With ExpressRoute, you can establish connections to Microsoft cloud services, such as Microsoft Azure and Office 365.

Connectivity can be from an any-to-any (IP VPN) network, a point-to-point Ethernet network, or a virtual cross-connection through a connectivity provider at a co-location facility. ExpressRoute connections do not go over the public Internet. This allows ExpressRoute connections to offer more reliability, faster speeds, consistent latencies, and higher security than typical connections over the Internet.

VNet Peering:

Virtual network peering enables you to seamlessly connect networks in Azure Virtual Network. The virtual networks appear as one for connectivity purposes. The traffic between virtual machines uses the Microsoft backbone infrastructure. Like traffic between virtual machines in the same network, traffic is routed through Microsoft’s private network only.

Azure supports the following types of peering:

- Virtual network peering: Connects virtual networks within the same Azure region.

- Global virtual network peering: Connects virtual networks across Azure regions.
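The two peering types can be told apart by comparing regions, as in the sketch below. The helper names and sample values are hypothetical. The overlap check reflects an additional Azure requirement not stated above, namely that peered virtual networks must not have overlapping address spaces.

```python
import ipaddress


def peering_type(region_a: str, region_b: str) -> str:
    """Same region means regular peering; different regions mean global peering."""
    if region_a == region_b:
        return "virtual network peering"
    return "global virtual network peering"


def address_spaces_overlap(cidr_a: str, cidr_b: str) -> bool:
    """True if the two address spaces share any addresses."""
    return ipaddress.ip_network(cidr_a).overlaps(ipaddress.ip_network(cidr_b))


print(peering_type("westeurope", "westeurope"))
print(peering_type("westeurope", "eastus"))
print(address_spaces_overlap("10.0.0.0/16", "10.1.0.0/16"))
print(address_spaces_overlap("10.0.0.0/16", "10.0.5.0/24"))
```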

Azure Storage Services:

Azure Storage is a Microsoft-managed service providing cloud storage that is highly available, secure, durable, scalable, and redundant. Azure Storage includes Azure Blobs (objects), Azure Data Lake Storage Gen2, Azure Files, Azure Queues, and Azure Tables.

https://docs.microsoft.com/en-us/azure/storage/

Azure Storage:

What is Block Storage

Block storage devices provide fixed-sized raw storage capacity. Each storage volume can be treated as an independent disk drive and controlled by an external server operating system. This block device can be mounted by the guest operating system as if it were a physical disk. The most common examples of Block Storage are SAN, iSCSI, and local disks.

Block storage is the most commonly used storage type for most applications. Block storage can be either locally or network-attached. Block storage devices typically are formatted with a file system like FAT32, NTFS, EXT3, and EXT4.

What is Object Storage

Block storage volumes can only be accessed when they’re attached to an operating system. But data kept on object storage devices, which consist of the object data and metadata, can be accessed directly through APIs or HTTP/HTTPS. You can store any kind of data, such as photos, videos, and log files. Object stores are designed for durability: data can be replicated across different data centers, and access is offered through simple web service interfaces.
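To make the "object data plus metadata, accessed by key" idea concrete, here is a toy in-memory object store. It is a sketch only: the `ObjectStore` class and its `put` and `get` methods are invented for the example and are not a real storage API.

```python
class ObjectStore:
    """Toy object store: each key maps to raw bytes plus a metadata dictionary."""

    def __init__(self):
        self._objects = {}

    def put(self, key: str, data: bytes, **metadata) -> None:
        self._objects[key] = (bytes(data), dict(metadata))

    def get(self, key: str):
        """Return (data, metadata) for the key; raises KeyError if it does not exist."""
        return self._objects[key]


store = ObjectStore()
store.put("photos/cat.jpg", b"\xff\xd8\xff", content_type="image/jpeg", owner="demo")

data, metadata = store.get("photos/cat.jpg")
print(len(data), metadata["content_type"])
```

Unlike a block volume, nothing has to be mounted or formatted; the caller only needs the key.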

Under Azure Storage, data falls into three categories:

- Structured data. Structured data is data that adheres to a schema, so all of the data has the same fields or properties. Structured data can be stored in a database table with rows and columns. Structured data relies on keys to indicate how one row in a table relates to data in another row of another table. Structured data is also referred to as relational data, as the data’s schema defines the table of data, the fields in the table, and the clear relationship between the two. Structured data is straightforward in that it’s easy to enter, query, and analyze. All of the data follows the same format. Examples of structured data include sensor data or financial data.

- Semi-structured data. Semi-structured data doesn’t fit neatly into tables, rows, and columns. Instead, semi-structured data uses tags or keys that organize and provide a hierarchy for the data. Semi-structured data is also referred to as non-relational or NoSQL data.

- Unstructured data. Unstructured data encompasses data that has no designated structure to it. This also means that there are no restrictions on the kinds of data it can hold. For example, a blob can hold a PDF document, a JPG image, a JSON file, video content, etc. As such, unstructured data is becoming more prominent as businesses try to tap into new data sources.
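The three categories above can be illustrated side by side with Python's standard library. The table, the JSON document, and the byte string are made-up samples; SQLite simply stands in for any relational database.

```python
import json
import sqlite3

# Structured: every row follows the same schema and can be queried with SQL.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (sensor_id TEXT, celsius REAL)")
conn.executemany("INSERT INTO readings VALUES (?, ?)", [("s1", 21.5), ("s2", 19.0)])
average = conn.execute("SELECT AVG(celsius) FROM readings").fetchone()[0]

# Semi-structured: keys and nesting give a hierarchy, but there is no fixed schema.
document = json.loads('{"name": "Ada", "tags": ["admin"], "address": {"city": "London"}}')

# Unstructured: just bytes; the store does not know or care what they mean.
blob = b"%PDF-1.7 example bytes"

print(average)
print(document["address"]["city"])
print(len(blob))
```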

Azure Blob Storage:

Azure Blob Storage is unstructured, meaning that there are no restrictions on the kinds of data it can hold. Blobs are highly scalable and apps work with blobs in much the same way as they would work with files on a disk, such as reading and writing data. Blob Storage can manage thousands of simultaneous uploads, massive amounts of video data, constantly growing log files, and can be reached from anywhere with an internet connection.

Blobs aren’t limited to common file formats. A blob could contain gigabytes of binary data streamed from a scientific instrument, an encrypted message for another application, or data in a custom format for an app you’re developing.

Azure Blob storage lets you stream large video or audio files directly to the user’s browser from anywhere in the world. Blob storage is also used to store data for backup, disaster recovery, and archiving. Page blobs, the blob type that backs virtual machine disks, can store up to 8 TB of data each.

Azure Blob storage is Microsoft’s object storage solution for the cloud. Blob storage is optimized for storing massive amounts of unstructured data. Unstructured data is data that does not adhere to a particular data model or definition, such as text or binary data.

https://docs.microsoft.com/en-us/azure/storage/blobs/storage-blobs-overview

Disk storage:

Disk storage provides disks for virtual machines, applications, and other services to access and use as they need, similar to how they would in on-premises scenarios. Disk storage allows data to be persistently stored and accessed from an attached virtual hard disk. Disks can be managed by Azure, or unmanaged, in which case the user manages and configures them. Typical scenarios for using disk storage are if you want to lift and shift applications that read and write data to persistent disks, or if you are storing data that is not required to be accessed from outside the virtual machine to which the disk is attached.

Disks come in many different sizes and performance levels, from solid-state drives (SSDs) to traditional spinning hard disk drives (HDDs), with varying performance abilities.

When working with VMs, you can use standard SSD and HDD disks for less critical workloads, and premium SSD disks for mission-critical production applications. Microsoft states that Azure Disks have consistently delivered enterprise-grade durability, with a zero percent annualized failure rate.

An Azure managed disk is a virtual hard disk (VHD). You can think of it as a physical disk in an on-premises server, but virtualized. Azure managed disks are stored as page blobs, which are random I/O storage objects in Azure. When you choose to use Azure managed disks with your workloads, Azure creates and manages the disk for you. The available types of disks are Ultra Solid State Drives (SSD), Premium SSD, Standard SSD, and Standard Hard Disk Drives (HDD).

https://docs.microsoft.com/en-us/azure/virtual-machines/windows/managed-disks-overview

Check this article to decide which storage to choose: https://docs.microsoft.com/en-us/azure/storage/common/storage-decide-blobs-files-disks

Azure File Storage:

Azure Files offers fully managed file shares in the cloud that are accessible via the industry standard Server Message Block (SMB) protocol. Azure file shares can be mounted concurrently by cloud or on-premises deployments of Windows, Linux, and macOS. Additionally, Azure file shares can be cached on Windows Servers with Azure File Sync for fast access near where the data is being used.

Applications running in Azure virtual machines or cloud services can mount a file storage share to access file data, just as a desktop application would mount a typical SMB share. Any number of Azure virtual machines or roles can mount and access the file storage share simultaneously. Typical usage scenarios would be to share files anywhere in the world, diagnostic data, or application data sharing.

https://docs.microsoft.com/en-in/azure/storage/files/storage-files-introduction

Exam tip: If a question asks which storage service supports the SMB protocol, the answer is Azure Files.

Check this short video on how to create Azure file shares and access them: https://www.youtube.com/watch?v=ARRButlO3PM

Data Lake Storage:

The Data Lake feature allows you to perform analytics on your data usage and prepare reports. Data Lake is a large repository that stores both structured and unstructured data.

Azure Data Lake Storage combines the scalability and cost benefits of object storage with the reliability and performance of the Big Data file system capabilities.

Note: When you create a storage account, you choose a replication option such as locally redundant, zone-redundant, or geo-redundant storage. The options available depend on the performance tier you select; premium accounts offer fewer replication choices than standard accounts.

Azure Disks and Azure Files fall under IaaS. Containers, Tables, and Queues fall under PaaS.

Azure Queue:

Azure Queue storage is a service for storing large numbers of messages that can be accessed from anywhere in the world.

Azure Queue Storage can be used to help build flexible applications and separate functions for better durability across large workloads. When application components are decoupled, they can scale independently. Queue storage provides asynchronous message queuing for communication between application components, whether they are running in the cloud, on the desktop, on-premises, or on mobile devices.

Typically, there are one or more sender components and one or more receiver components. Sender components add messages to the queue, while receiver components retrieve messages from the front of the queue for processing. The following illustration shows multiple sender applications adding messages to the Azure Queue and one receiver application retrieving the messages.

You can use queue storage to:

- Create a backlog of work and pass messages between different Azure web servers.

- Distribute load among different web servers/infrastructure and manage bursts of traffic.

- Build resilience against component failure when multiple users access your data at the same time.
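The sender and receiver pattern described above can be sketched with an in-memory queue. This is not the Azure Queue storage API: the `send` and `receive` helpers and the order messages are hypothetical, and a `deque` stands in for the real service.

```python
from collections import deque

work_queue = deque()  # stands in for a queue in the storage service


def send(message: dict) -> None:
    """Sender side: add a message to the back of the queue."""
    work_queue.append(message)


def receive():
    """Receiver side: take the message at the front of the queue, or None if empty."""
    return work_queue.popleft() if work_queue else None


# A sender builds up a backlog of work...
for order_id in (101, 102, 103):
    send({"order_id": order_id})

# ...and a receiver drains it later, at its own pace.
processed = []
while (message := receive()) is not None:
    processed.append(message["order_id"])

print(processed)
```

Because the sender never calls the receiver directly, either side can be scaled or restarted without the other noticing.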

Storage tiers

Azure offers three storage tiers for blob object storage:

- Hot storage tier: optimized for storing data that is accessed frequently.

- Cool storage tier: optimized for data that is infrequently accessed and stored for at least 30 days.

- Archive storage tier: for data that is rarely accessed and stored for at least 180 days with flexible latency requirements. Storage costs are low, but you pay additional charges when you retrieve the data.
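As a rough decision aid, the tier descriptions above can be turned into a small function. The 30-day and 180-day minimums come from the list; the read-frequency thresholds (`frequent_reads`, `rare_reads`) are arbitrary assumptions, since "frequently" and "rarely" are not defined numerically here.

```python
def suggest_tier(reads_per_month: float, retention_days: int,
                 frequent_reads: float = 10, rare_reads: float = 0.1) -> str:
    """Suggest a blob storage tier from expected access frequency and retention."""
    if reads_per_month >= frequent_reads:
        return "hot"
    if retention_days >= 180 and reads_per_month <= rare_reads:
        return "archive"
    if retention_days >= 30:
        return "cool"
    # Short-lived data does not meet the minimum storage period of the other tiers.
    return "hot"


print(suggest_tier(reads_per_month=100, retention_days=10))
print(suggest_tier(reads_per_month=1, retention_days=90))
print(suggest_tier(reads_per_month=0, retention_days=365))
```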

Encryption and replication

Azure provides security and high availability to your data through encryption and replication features.

Encryption for storage services

The following encryption types are available for your resources:

- Azure Storage Service Encryption (SSE) for data at rest helps you secure your data to meet your organization’s security and regulatory compliance requirements. It encrypts the data before storing it and decrypts it when you retrieve it. The encryption and decryption are transparent to the user.

- Client-side encryption is where the data is already encrypted by the client libraries. Azure stores the data in the encrypted state at rest, which is then decrypted during retrieval.

Replication for storage availability

A replication type is set up when you create a storage account. The replication feature helps keep your data durable and available. Azure provides regional and geographic replication to protect your data against natural disasters and other local disasters like fire or flooding.

Azure Storage redundancy

Azure Storage always stores multiple copies of your data so that it is protected from planned and unplanned events, including transient hardware failures, network or power outages, and massive natural disasters. Redundancy ensures that your storage account meets the Service-Level Agreement (SLA) for Azure Storage even in the face of failures.

Data in an Azure Storage account is always replicated three times in the primary region. Azure Storage offers two options for how your data is replicated in the primary region:

- Locally redundant storage (LRS) copies your data synchronously three times within a single physical location in the primary region. LRS is the least expensive replication option, but is not recommended for applications requiring high availability.

- Zone-redundant storage (ZRS) copies your data synchronously across three Azure availability zones in the primary region. For applications requiring high availability, Microsoft recommends using ZRS in the primary region, and also replicating to a secondary region.

Azure Storage offers two options for copying your data to a secondary region:

- Geo-redundant storage (GRS) copies your data synchronously three times within a single physical location in the primary region using LRS. It then copies your data asynchronously to a single physical location in the secondary region.

- Geo-zone-redundant storage (GZRS) copies your data synchronously across three Azure availability zones in the primary region using ZRS. It then copies your data asynchronously to a single physical location in the secondary region.

The primary difference between GRS and GZRS is how data is replicated in the primary region. Within the secondary location, data is always replicated synchronously three times using LRS. LRS in the secondary region protects your data against hardware failures.

https://docs.microsoft.com/en-us/azure/storage/common/storage-redundancy
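The four redundancy options can be summarized as a small lookup table built from the descriptions above. The `Redundancy` class and helper names are invented for this sketch; the copy counts follow the text (three copies in the primary region, plus three more with LRS in the secondary region for the geo options).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Redundancy:
    primary_locations: int   # 1 = single physical location, 3 = availability zones
    primary_copies: int
    secondary_copies: int    # 0 = no secondary region


OPTIONS = {
    "LRS": Redundancy(primary_locations=1, primary_copies=3, secondary_copies=0),
    "ZRS": Redundancy(primary_locations=3, primary_copies=3, secondary_copies=0),
    "GRS": Redundancy(primary_locations=1, primary_copies=3, secondary_copies=3),
    "GZRS": Redundancy(primary_locations=3, primary_copies=3, secondary_copies=3),
}


def total_copies(option: str) -> int:
    r = OPTIONS[option]
    return r.primary_copies + r.secondary_copies


def spans_zones(option: str) -> bool:
    return OPTIONS[option].primary_locations > 1


def has_secondary_region(option: str) -> bool:
    return OPTIONS[option].secondary_copies > 0


for name in OPTIONS:
    print(name, total_copies(name), spans_zones(name), has_secondary_region(name))
```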

Continue on Part 2: HERE
